HDR (High Dynamic Range) enhances brightness, contrast, and color depth, making images look more realistic than Standard Dynamic Range (SDR). It allows deeper blacks, brighter highlights, and a wider color gamut, benefiting gaming, media, and professional workflows.

HDR vs. SDR: SDR monitors have limited contrast and color range, while 4K HDR monitors deliver higher brightness (400-1,000+ nits) and, on better models, local dimming. The result is more visible detail in both dark and bright areas, with fewer clipped highlights and crushed shadows in high-contrast scenes.

The Rise of HDR Monitors: Reviews of the best 4K PC gaming monitors, such as those from RTINGS, show HDR adoption growing thanks to improved panel technologies like OLED, QLED, and Mini-LED. Dolby Vision and HDR10+ are increasingly common in streaming and content creation and are starting to appear in gaming, making high dynamic range displays more accessible.

What Are the Key HDR Standards and Certifications?

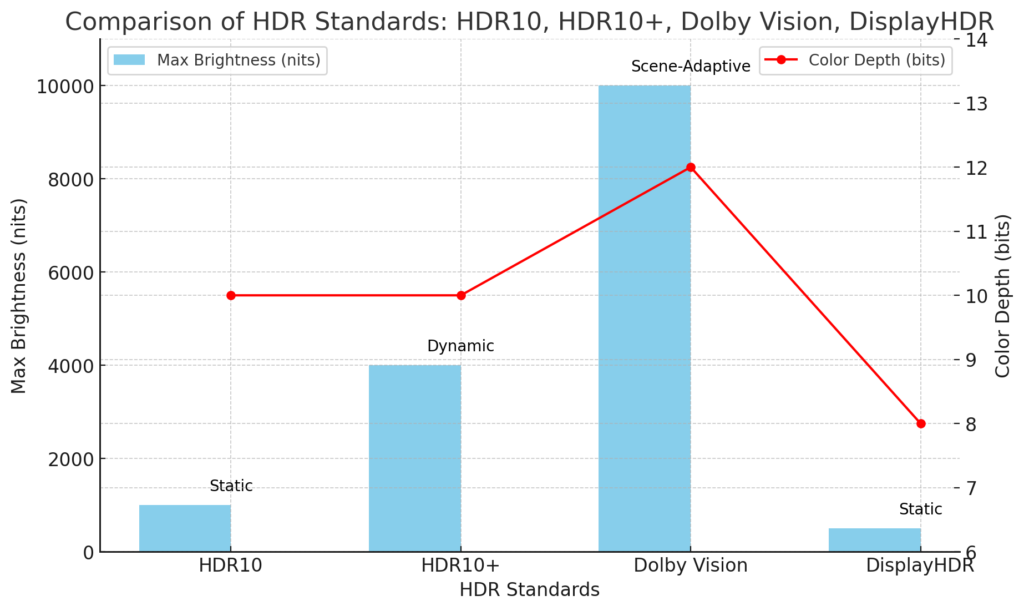

HDR standards define how displays handle brightness, contrast, and color depth. HDR10, HDR10+, and Dolby Vision are signal formats that describe how content is encoded, while VESA’s DisplayHDR is a certification of a monitor’s measured performance. Each guarantees a different level of capability, and the differences affect real-world viewing quality.

HDR10 and HDR10+

HDR10 is the most widely used HDR format. It carries 10-bit color and encodes brightness on an absolute scale that can describe up to 10,000 nits, although content is usually mastered at 1,000 or 4,000 nits and high-end monitors reach 1,000 nits or more. It uses static metadata, meaning the same brightness and contrast information applies across an entire video. In contrast, HDR10+ adds dynamic metadata, adjusting brightness scene by scene for better highlight and shadow detail.

- 10-Bit Color on HDR10 Monitors: Most HDR10 monitors accept the HDR10 signal but may lack true 10-bit color, using an 8-bit panel with frame rate control (FRC) instead. For gaming and professional editing, check the panel’s native bit depth.

- HDR10+ Compatibility: Although HDR10+ improves on HDR10, fewer monitors, including 4K HDR gaming models, support it than support Dolby Vision.
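To make the static-versus-dynamic distinction concrete, the sketch below computes the two static values HDR10 typically carries, MaxCLL (the brightest pixel in the whole video) and MaxFALL (the highest frame-average brightness), and compares them with per-scene peaks, which is the kind of information dynamic metadata can supply. It is a minimal illustration assuming each frame is a plain list of per-pixel brightness values in nits; the function names and sample numbers are hypothetical.

```python
# Minimal sketch: static (HDR10-style) vs. per-scene (dynamic) brightness
# metadata. Assumption: each frame is a list of per-pixel brightness values
# in nits; the scenes and numbers below are hypothetical.

Frame = list[float]


def max_cll(frames: list[Frame]) -> float:
    """MaxCLL: the brightest pixel anywhere in the video."""
    return max(max(frame) for frame in frames)


def max_fall(frames: list[Frame]) -> float:
    """MaxFALL: the highest frame-average brightness in the video."""
    return max(sum(frame) / len(frame) for frame in frames)


def per_scene_peaks(scenes: list[list[Frame]]) -> list[float]:
    """Dynamic-metadata idea: one peak value per scene instead of one per video."""
    return [max_cll(scene) for scene in scenes]


dark_scene = [[0.5, 2.0, 40.0], [0.3, 1.5, 60.0]]               # night interior
bright_scene = [[200.0, 800.0, 1000.0], [150.0, 900.0, 950.0]]  # sunlit exterior
video = dark_scene + bright_scene

print(max_cll(video))                               # 1000.0
print(round(max_fall(video), 1))                    # 666.7
print(per_scene_peaks([dark_scene, bright_scene]))  # [60.0, 1000.0]
```

With only static metadata, the display is told the video peaks at 1,000 nits even during the dark scene, whose real peak is 60 nits; per-scene values let it tone-map each scene on its own terms.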

Dolby Vision HDR

Dolby Vision supports up to 12-bit color depth and brightness up to 10,000 nits, and its dynamic metadata generally gives it an advantage over HDR10 (and, to a lesser degree, HDR10+) in tone mapping and color accuracy (What is Dolby Vision and how is it different from HDR10?).

- Dolby Vision Monitors on PC: Dolby Vision monitors are optimized for content playback, but on a PC the GPU, drivers, and operating system must also support Dolby Vision to take full advantage of it.

- Dolby Vision Gaming on PC and Consoles: Some of the best-rated 2024 gaming monitors (per RTINGS) support Dolby Vision, but games must integrate Dolby Vision to deliver its full benefit. Future 4K HDR gaming monitors may adopt Dolby Vision for better in-game contrast and brightness optimization.
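HDR10 and Dolby Vision both encode brightness with the PQ transfer function defined in SMPTE ST 2084, which maps signal values to absolute luminance up to 10,000 nits. The sketch below implements that curve and uses it to show why extra bit depth matters: more code values mean smaller brightness jumps between adjacent steps, which reduces visible banding. It assumes full-range code values for simplicity (real HDR10 video usually uses a narrower "limited" range), and `step_at` is a hypothetical helper.

```python
# Sketch of the SMPTE ST 2084 (PQ) curve and its quantization step size.
# Assumption: full-range code values (0 .. 2**bits - 1) for simplicity.

M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PQ_PEAK_NITS = 10_000.0  # absolute ceiling of the PQ signal


def pq_encode(nits: float) -> float:
    """Inverse EOTF: luminance in nits -> normalized signal in [0, 1]."""
    ym = (max(nits, 0.0) / PQ_PEAK_NITS) ** M1
    return ((C1 + C2 * ym) / (1 + C3 * ym)) ** M2


def pq_decode(signal: float) -> float:
    """EOTF: normalized signal in [0, 1] -> luminance in nits."""
    ep = min(max(signal, 0.0), 1.0) ** (1 / M2)
    return PQ_PEAK_NITS * (max(ep - C1, 0.0) / (C2 - C3 * ep)) ** (1 / M1)


def step_at(nits: float, bits: int) -> float:
    """Hypothetical helper: brightness jump to the next code value near `nits`."""
    levels = 2 ** bits - 1
    code = round(pq_encode(nits) * levels)
    return pq_decode((code + 1) / levels) - pq_decode(code / levels)


print(round(pq_encode(1000), 3))  # 0.752
for bits in (8, 10, 12):
    print(f"{bits}-bit step near 1,000 nits: {step_at(1000, bits):.2f} nits")
```

Each extra two bits quadruples the number of code values, so the step near 1,000 nits shrinks by roughly a factor of four from 8-bit to 10-bit and again to 12-bit.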

DisplayHDR Certifications

VESA’s DisplayHDR certification ranks HDR monitors based on brightness, contrast, and color performance. The key levels are:

| Certification | Minimum Peak Brightness | Color Gamut and Contrast |
|---|---|---|
| DisplayHDR 400 | 400 nits | sRGB |
| DisplayHDR 600 | 600 nits | Wider color gamut, better contrast |
| DisplayHDR 1000 | 1,000 nits | Wide color gamut, highest brightness and contrast of the three tiers ("true HDR") |

- “HDR600” Labels vs. Real HDR: Some monitors marketed as HDR600 fall short of true HDR performance because of low contrast ratios or poor local dimming.

- “Fake” HDR vs. True HDR: Many budget HDR monitors claim HDR support but lack the brightness or proper tone mapping HDR needs, resulting in dull HDR images.
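The DisplayHDR tiers and the “fake HDR” warning signs above can be turned into a quick spec-sheet screen. This is a hypothetical helper, not VESA’s test procedure: it checks only the peak-brightness floors from the table plus two of the warning signs (missing local dimming, low native contrast), and the 3,000:1 contrast threshold is an illustrative assumption.

```python
from typing import List, Optional

# Peak-brightness floors from the DisplayHDR table. Real certification also
# tests black level, color gamut, and other criteria this sketch ignores.
DISPLAYHDR_TIERS = [(1000, "DisplayHDR 1000"), (600, "DisplayHDR 600"),
                    (400, "DisplayHDR 400")]


def brightness_tier(peak_nits: float) -> Optional[str]:
    """Highest tier whose brightness floor the measured peak meets, if any."""
    for floor, name in DISPLAYHDR_TIERS:
        if peak_nits >= floor:
            return name
    return None


def hdr_red_flags(peak_nits: float, has_local_dimming: bool,
                  native_contrast: float, min_contrast: float = 3000.0) -> List[str]:
    """Hypothetical 'fake HDR' screen; the contrast threshold is illustrative."""
    flags = []
    if brightness_tier(peak_nits) is None:
        flags.append("peak brightness below 400 nits")
    if not has_local_dimming:
        flags.append("no local dimming")
    if native_contrast < min_contrast:
        flags.append("low native contrast")
    return flags


print(brightness_tier(650))             # DisplayHDR 600
print(hdr_red_flags(350, False, 1000))  # all three warnings
print(hdr_red_flags(1100, True, 5000))  # []
```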

Emerging or Proprietary Standards

New HDR technologies continue to develop, including Apple’s XDR, which stands for Extreme Dynamic Range.

- Dolby Vision vs. HDR10: Which Is Better? Dolby Vision offers dynamic metadata, up to 12-bit color, and generally better tone mapping, while HDR10 is an open standard with wider support across devices.

- Apple’s XDR Technology: Apple’s XDR panels combine full-array local dimming with very high brightness levels, competing with 4K HDR monitors that support Dolby Vision.

Future HDR computer monitors will likely bring better HDR performance, wider adoption of Dolby Vision, and higher brightness standards as technology advances.

What Are the Technical Requirements for HDR?

HDR functionality depends on hardware compatibility, display brightness, operating system support, and HDR content availability. To get true HDR, users need compatible GPUs, proper cables, and monitors with sufficient brightness and contrast.

Hardware Compatibility

Not all monitors and GPUs fully support 4K HDR. Ensuring compatibility requires checking:

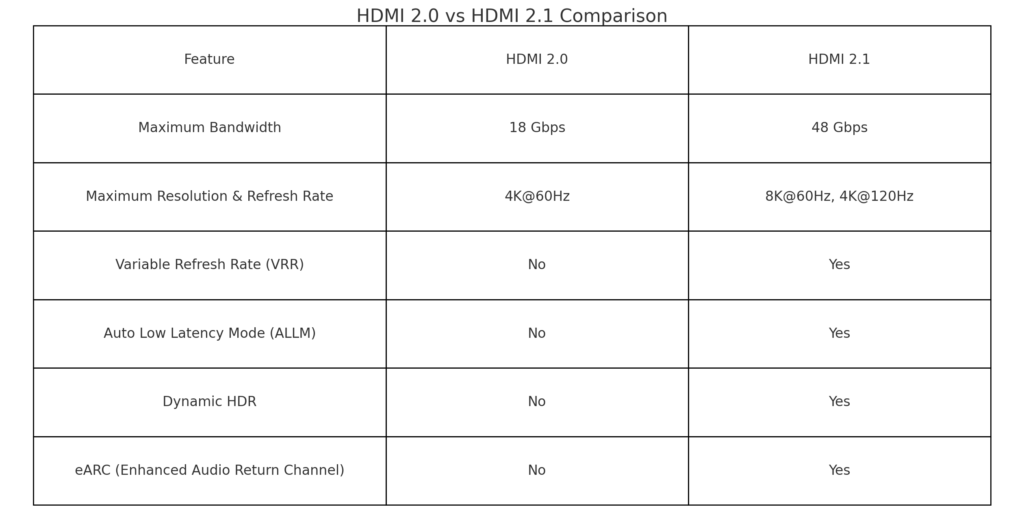

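- HDMI 2.0 vs. HDMI 2.1: HDMI 2.0 (18 Gbps) supports 4K at 60Hz with HDR, usually with 4:2:2 chroma subsampling at 10-bit color, while HDMI 2.1 (up to 48 Gbps) allows 4K at 120Hz with full 10-bit RGB (What is HDMI 2.1 and how does it affect HDR performance?).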

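- DisplayPort for HDR: DisplayPort 1.4 supports HDR10 and HDR10+ and can drive high-refresh 4K HDR, often with Display Stream Compression (DSC); DisplayPort 2.0 adds much more bandwidth for high refresh rates at high resolutions. Peak brightness depends on the monitor, not the cable.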

- Dolby Vision vs. HDR10+ on PC: A Dolby Vision PC monitor requires GPU-level Dolby Vision support, while an HDR10+ monitor depends on game and content integration for dynamic HDR performance.
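Whether a cable link can carry a given HDR mode comes down to bandwidth: pixels per second times bits per pixel. The sketch below estimates this for 3840 x 2160 video using the standard CTA-861 timing (4400 x 2250 total pixels per frame, including blanking) and compares it with each link’s usable data rate after line coding. It is approximate by design: it ignores audio, forward error correction, and Display Stream Compression, and it treats 4:2:2 as two samples per pixel, which simplifies how HDMI actually packs it.

```python
# Rough bandwidth check for uncompressed 3840 x 2160 video (approximate:
# ignores audio, FEC, and DSC; 4:2:2 treated as 2 samples per pixel).

# Usable data rate in Gbit/s after line coding.
LINK_GBPS = {
    "HDMI 2.0": 18.0 * 8 / 10,         # TMDS, 8b/10b coding
    "HDMI 2.1": 48.0 * 16 / 18,        # FRL, 16b/18b coding
    "DisplayPort 1.4": 32.4 * 8 / 10,  # HBR3, 8b/10b coding
}

SAMPLES_PER_PIXEL = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}

# CTA-861 timing for 3840 x 2160: total pixels per frame incl. blanking.
TOTAL_PIXELS_PER_FRAME = 4400 * 2250


def required_gbps(refresh_hz: int, bits_per_sample: int, chroma: str) -> float:
    """Video data rate in Gbit/s for a 4K mode."""
    pixels_per_second = TOTAL_PIXELS_PER_FRAME * refresh_hz
    return pixels_per_second * bits_per_sample * SAMPLES_PER_PIXEL[chroma] / 1e9


def fits(link: str, refresh_hz: int, bits_per_sample: int, chroma: str) -> bool:
    """True if the mode's data rate is within the link's usable rate."""
    return required_gbps(refresh_hz, bits_per_sample, chroma) <= LINK_GBPS[link]


for link, hz, bits, chroma in [
    ("HDMI 2.0", 60, 10, "4:4:4"),
    ("HDMI 2.0", 60, 10, "4:2:2"),
    ("HDMI 2.1", 120, 10, "4:4:4"),
    ("DisplayPort 1.4", 120, 10, "4:4:4"),
]:
    need = required_gbps(hz, bits, chroma)
    print(f"{link}, 4K{hz} {bits}-bit {chroma}: needs {need:.2f} Gbps, "
          f"fits={fits(link, hz, bits, chroma)}")
```

Under these assumptions, 10-bit 4:4:4 at 4K60 does not fit HDMI 2.0, which is why HDR over HDMI 2.0 commonly uses 4:2:2, while 4K120 10-bit 4:4:4 fits HDMI 2.1 but not uncompressed DisplayPort 1.4.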

Monitor Brightness and Nits

Brightness, measured in nits (1 nit = 1 candela per square meter), is key to a true HDR computer display.

- How Bright Is an HDR Monitor? HDR monitors range from **400 nits (basic HDR) to 1,000+ nits (true HDR performance)**.

- 1,000-Nit vs. 2,000-Nit Monitors: A 1,000-nit monitor meets the peak brightness requirement of DisplayHDR 1000 (full certification also tests contrast, color, and more), while a 2,000-nit monitor targets extremely bright HDR highlights.

- Importance of Local Dimming: Full-array local dimming divides the backlight into independently controlled zones, improving contrast so HDR monitors can display deeper blacks alongside brighter highlights.
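Local dimming works because an LCD can only block light, not create it: a pixel can go no darker than its zone’s backlight divided by the panel’s native contrast. This toy one-dimensional model, assuming a 1,000:1 panel and no light spill between zones, sets each zone’s backlight to the brightest pixel it covers. It shows how more (smaller) zones keep dark areas dark, while dark pixels sharing a zone with a highlight are lifted, which is the blooming or halo effect.

```python
# Toy 1-D model of full-array local dimming. Assumptions: 1,000:1 native LCD
# contrast, each zone's backlight set to the brightest pixel it covers, and no
# light spill between neighboring zones.

NATIVE_CONTRAST = 1000.0


def zone_backlights(target: list[float], zone_size: int) -> list[float]:
    """Backlight (nits) per zone: the brightest target pixel in that zone."""
    return [max(target[i:i + zone_size]) for i in range(0, len(target), zone_size)]


def displayed(target: list[float], zone_size: int) -> list[float]:
    """Luminance each pixel actually shows (nits).

    The LCD layer can only block light, so a pixel can go no darker than its
    zone's backlight divided by the native contrast.
    """
    backlights = zone_backlights(target, zone_size)
    return [max(want, backlights[i // zone_size] / NATIVE_CONTRAST)
            for i, want in enumerate(target)]


# A bright star on a near-black sky (nits).
scene = [0.01, 0.01, 0.01, 1000.0, 0.01, 0.01, 0.01, 0.01]
print(displayed(scene, 8))  # one zone: global dimming
print(displayed(scene, 4))  # two zones
print(displayed(scene, 1))  # per-pixel control, like OLED
```

With one 8-pixel zone (effectively global dimming), every dark pixel rises to 1 nit; with 4-pixel zones, only the zone containing the star blooms; with one zone per pixel, the scene is reproduced exactly.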

Operating System and Software

Different operating systems handle HDR settings differently.

- HDR in Windows, macOS, and Consoles: Windows supports HDR10 and, on some hardware and apps, Dolby Vision, while macOS primarily uses HDR10. Among consoles, Xbox supports Dolby Vision, while the PlayStation 5 relies on HDR10.

- Does My Monitor Support HDR? Look for an HDR toggle in Windows Display settings or in macOS System Settings (System Preferences on older versions) under Displays. A “4K HDR” label on a monitor does not always guarantee true HDR support.

- Common HDR Issues: Problems like washed-out colors or color banding occur when HDR settings are mismatched between the GPU, display, and operating system.

HDR Content Availability

Not all HDR content is created equal—streaming services, games, and Blu-ray discs vary in support.

- Where to Find True HDR Content? Platforms like Netflix, Disney+, and Apple TV+ offer Dolby Vision and HDR10.

- Dolby Vision vs. HDR10 in Apps: Some games and streaming services prioritize Dolby Vision, while others stick to HDR10 or HDR10+.

For true HDR performance, users must ensure full compatibility across their GPU, monitor, cables, and content sources.

How to Choose the Right HDR Monitor?

Selecting an HDR monitor depends on resolution, panel type, budget, and setup requirements. The ideal choice balances screen size, brightness, local dimming, and HDR certification for the best viewing experience.

Resolution and Size

The trade-off between 1080p HDR and 4K HDR is crucial for display clarity and HDR effectiveness.

- 1080p HDR vs. 4K HDR Monitors: 4K HDR monitors offer higher pixel density and, since they are usually higher-end models, often better local dimming and color accuracy. 1080p HDR monitors may lack true HDR benefits: HDR relies more on brightness and contrast than on resolution, and these budget-oriented models often fall short on both.

- Larger Screens and Viewing Distance: 32-inch and 43-inch HDR monitors offer immersive HDR but need an appropriate viewing distance so individual pixels are not visible, especially at lower resolutions. A 32-inch 4K HDR monitor stays sharp at typical desk distances, while a 43-inch 4K HDR monitor suits console gaming or media viewing from farther back.
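Pixel density and viewing distance can be estimated with a little geometry. The sketch below computes pixels per inch from resolution and diagonal, then the distance at which one pixel subtends one arcminute, a common rule-of-thumb limit for 20/20 vision beyond which individual pixels blend together. The one-arcminute threshold is an assumption; sharper eyes can resolve finer detail.

```python
import math

# Assumption: 20/20 vision resolves about one arcminute of visual angle.
ARCMINUTE = math.radians(1 / 60)


def ppi(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixels per inch from resolution and diagonal size."""
    return math.hypot(width_px, height_px) / diagonal_in


def pixel_blend_distance_in(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Distance (inches) beyond which one pixel subtends under one arcminute."""
    pitch = 1 / ppi(width_px, height_px, diagonal_in)  # inches per pixel
    return pitch / math.tan(ARCMINUTE)


for name, (w, h), size in [
    ("27-inch 1080p", (1920, 1080), 27),
    ("32-inch 4K", (3840, 2160), 32),
    ("43-inch 4K", (3840, 2160), 43),
]:
    print(f"{name}: {ppi(w, h, size):.0f} PPI, pixels blend beyond "
          f"~{pixel_blend_distance_in(w, h, size):.0f} in")
```

By this estimate, a 32-inch 4K monitor looks pixel-free from roughly 25 inches, a typical desk distance, while a 43-inch 4K screen needs about 34 inches and a 27-inch 1080p screen about 42 inches.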

Panel Technologies

Panel type affects HDR performance, brightness, and color accuracy.

- IPS vs. VA vs. OLED vs. QLED:

  - IPS: Best for color accuracy and wide viewing angles, but lower contrast.

  - VA: Superior contrast ratio, making HDR effects more pronounced.

  - OLED: True HDR performance with perfect blacks and effectively infinite contrast, but susceptible to burn-in from static content.

  - QLED: Higher brightness (some models exceed 1,000 nits) and improved HDR color reproduction.

- Best Panel for Different Uses: Gaming monitors favor OLED or high-refresh VA panels, while professional editing requires IPS or QLED with accurate color calibration.
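Contrast ratio translates directly into black level: at a given white level, black equals white divided by the contrast ratio. This sketch uses assumed typical native ratios of 1,000:1 for IPS and 3,000:1 for VA (real panels vary, and local dimming changes the result) to show the black level at a 400-nit white and the dynamic range in photographic stops.

```python
import math

# Assumed typical native contrast ratios without local dimming; real panels vary.
TYPICAL_CONTRAST = {"IPS": 1000.0, "VA": 3000.0}


def black_level(white_nits: float, contrast: float) -> float:
    """Black level in nits implied by a white level and a contrast ratio."""
    return white_nits / contrast


def stops(contrast: float) -> float:
    """Dynamic range in photographic stops (each stop doubles the light)."""
    return math.log2(contrast)


for panel, contrast in TYPICAL_CONTRAST.items():
    print(f"{panel}: black {black_level(400.0, contrast):.3f} nits at 400-nit white, "
          f"{stops(contrast):.1f} stops")

# OLED pixels can switch off entirely, so black approaches 0 nits and the
# ratio grows without bound, which is why OLED is described as "infinite contrast".
```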

Ease of Setup and Calibration

A well-calibrated HDR monitor improves brightness, contrast, and color accuracy.

- Built-in HDR Profiles vs. Manual Tweaking: Some 4K HDR gaming monitors include factory-calibrated HDR profiles, while others need manual adjustment for optimal visuals.

- User-Friendly OSD (On-Screen Display) Settings: A good HDR monitor provides intuitive controls for brightness, HDR mode selection, and local dimming settings.

How Do HDR, DCR, and XDR Compare?

HDR, DCR, and XDR represent different technologies for enhancing contrast and brightness in monitors. While HDR follows industry standards, DCR adjusts contrast dynamically, and XDR pushes brightness and dimming to an extreme level for professional applications.

HDR (High Dynamic Range)

HDR expands contrast and color depth beyond SDR (Standard Dynamic Range) to create deeper blacks and brighter highlights.

- Industry Standards: Most HDR monitors support HDR10, some add HDR10+ or Dolby Vision, and many carry a VESA DisplayHDR certification.

- Widespread Availability: 4K HDR computer and gaming monitors are available across consumer and professional markets, with better models offering strong brightness, color accuracy, and local dimming.

DCR (Dynamic Contrast Ratio)

DCR adjusts the backlight dynamically in real time to enhance perceived contrast but does not reach true HDR performance levels (What Is DCR on a Monitor?).

- How It Works: DCR monitors raise or lower the backlight based on on-screen content, creating a perceived contrast boost.

- Limitations: Unlike true HDR, DCR can introduce halo effects, brightness fluctuations, and artificial contrast shifts, making it less suitable for HDR gaming and video editing.
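The gap between DCR’s marketing numbers and real HDR is easy to see in a toy model. Assuming a single global backlight that follows each frame’s brightest pixel, a 1,000:1 native panel, and a backlight that can dim to 10% of full power (all illustrative values, with hypothetical function names), the advertised dynamic ratio is the native ratio divided by the minimum backlight, yet within any single frame the contrast never exceeds the native ratio.

```python
# Toy model of dynamic contrast (DCR). Assumptions: one global backlight that
# follows the frame's brightest pixel, a fixed native panel contrast, and a
# minimum backlight level; all numbers are illustrative.


def dcr_frame(pixels: list[float], max_nits: float, native_contrast: float,
              min_backlight: float) -> tuple[float, float]:
    """Return (white, black) in nits for one frame.

    `pixels` are target levels from 0.0 to 1.0 of full white. The backlight is
    dimmed to the frame's brightest pixel (but not below `min_backlight`), which
    scales white and black together, so in-frame contrast stays native.
    """
    backlight = max(min_backlight, max(pixels))
    white = backlight * max_nits
    return white, white / native_contrast


def dynamic_contrast(native_contrast: float, min_backlight: float) -> float:
    """Advertised ratio: white at full backlight vs. black at minimum backlight."""
    return native_contrast / min_backlight


dark_frame = [0.02, 0.05, 0.1]
bright_frame = [0.2, 0.9, 1.0]
for name, frame in [("dark", dark_frame), ("bright", bright_frame)]:
    white, black = dcr_frame(frame, max_nits=300.0, native_contrast=1000.0,
                             min_backlight=0.1)
    print(f"{name}: white {white:.0f} nits, black {black:.2f} nits, "
          f"in-frame contrast {white / black:.0f}:1")
print(f"advertised dynamic contrast: {dynamic_contrast(1000.0, 0.1):.0f}:1")
```

Here the dark frame peaks at 30 nits and the bright frame at 300 nits, so the "dynamic" ratio reaches 10,000:1, but each frame on its own is still 1,000:1; the backlight swing between frames is what viewers notice as brightness fluctuation.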

XDR (Extreme Dynamic Range)

XDR represents ultra-high brightness and advanced local dimming, primarily seen in professional-grade displays.

- Beyond Standard HDR: XDR displays reach peak brightness well above typical HDR monitors, often 1,600 nits or more, for demanding HDR workflows.

- Professional Use Cases: XDR technology is found in color-critical monitors, such as Apple’s XDR displays, which cater to video editing, design, and high-end content creation.

Key Differences and Use Cases

| Feature | HDR | DCR | XDR |
|---|---|---|---|
| Industry Standards | HDR10, HDR10+, Dolby Vision, DisplayHDR | Manufacturer-implemented | Proprietary (Apple XDR, high-end HDR monitors) |
| Brightness Range | 400-1,000+ nits | Adjusts dynamically, typically lower peak brightness | 1,600+ nits, ultra-high brightness |
| Best For | Gaming, media consumption, general use | Budget displays needing better perceived contrast | Professional workflows, high-end video editing |

Practical Advice: For most users, a properly certified 4K HDR monitor offers the best balance of quality and affordability. DCR can enhance budget monitors, but true HDR performance requires proper brightness and local dimming. XDR is best for professionals who need the highest contrast accuracy and brightness levels.

How to Properly Set Up and Calibrate an HDR Monitor?

Setting up HDR correctly requires adjusting hardware settings, color calibration, and viewing conditions. A properly configured HDR computer display delivers accurate contrast, brightness, and color depth across different applications.

Enabling HDR

HDR must be activated through both software settings and the monitor’s on-screen display (OSD) (How to check if your monitor supports HDR?).

- Windows/macOS & Consoles: In Windows, turn on Settings > System > Display > Use HDR. On macOS, open System Settings (System Preferences on older versions) > Displays and turn on High Dynamic Range for a supported display. Console users should make sure HDR is enabled in the console’s system settings.

- Monitor OSD Settings: Many 4K HDR monitors have dedicated HDR picture modes. Make sure HDR mode is enabled in the OSD to prevent incorrect brightness scaling.

Color Calibration and Brightness

Calibrating HDR settings ensures accurate color reproduction and comfortable brightness levels.

- Using a Colorimeter or Built-in Calibration Tools: Devices like the X-Rite i1Display or SpyderX optimize HDR color accuracy and white balance. Some of the best HDR computer monitors include factory-calibrated HDR presets.

- Adjusting Peak Brightness: 4K HDR gaming monitors often reach 600-1,000 nits, but excessive brightness can cause eye strain. Reduce peak brightness when viewing in a dim room.
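When peak brightness is lowered, highlights mastered brighter than the new peak have to be compressed, or they clip to flat white. The sketch below shows one simple roll-off, assuming a knee at 75% of the display’s peak and an exponential shoulder above it; it is an illustrative curve, not ITU-R BT.2390 or any particular monitor’s tone mapping.

```python
import math


def roll_off(nits: float, display_peak: float, knee_fraction: float = 0.75) -> float:
    """Compress highlights above a knee so output never exceeds `display_peak`.

    Below the knee, luminance passes through unchanged. Above it, an
    exponential shoulder starts with slope 1 (no visible kink) and approaches
    the peak asymptotically. `knee_fraction` must be below 1.
    Illustrative only; not ITU-R BT.2390.
    """
    knee = knee_fraction * display_peak
    if nits <= knee:
        return nits
    headroom = display_peak - knee
    return knee + headroom * (1.0 - math.exp(-(nits - knee) / headroom))


for scene_nits in (100, 500, 1000, 4000):
    print(f"{scene_nits:>5} nits in content -> "
          f"{roll_off(scene_nits, 600):.1f} nits on a 600-nit display")
```

For a 600-nit target, a 1,000-nit highlight lands near 596 nits and a 4,000-nit highlight approaches, but does not exceed, 600 nits, so the brightest details are compressed rather than clipped together.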

Troubleshooting Common HDR Issues

Many users experience washed-out colors, black crush, or inconsistent HDR playback.

- Fixing Washed-Out HDR: Ensure HDR mode matches content format (HDR10, Dolby Vision, etc.). Disable Windows Auto HDR if colors appear dull.

- Black Crush and Shadow Detail Loss: Some highly rated gaming monitors allow gamma and black level adjustments to restore shadow detail.
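One common cause of washed-out HDR is a format mismatch somewhere in the chain, such as a PQ-encoded HDR10 signal being treated as SDR. This illustrative sketch assumes the mismatched path decodes the signal with a 2.2 gamma and a 300-nit white (both assumed values) and compares intended with displayed luminance: shadows are lifted and highlights pulled down, which collapses contrast and makes the image look flat.

```python
# Why a format mismatch can look washed out (illustrative only).
# Assumption: a PQ (SMPTE ST 2084) signal is decoded as SDR with a 2.2 gamma
# and a 300-nit white.

# SMPTE ST 2084 (PQ) constants
M1, M2 = 2610 / 16384, 2523 / 4096 * 128
C1, C2, C3 = 3424 / 4096, 2413 / 4096 * 32, 2392 / 4096 * 32


def pq_encode(nits: float) -> float:
    """Absolute luminance (nits) -> PQ signal in [0, 1]."""
    ym = (max(nits, 0.0) / 10_000) ** M1
    return ((C1 + C2 * ym) / (1 + C3 * ym)) ** M2


def shown_as_sdr(signal: float, sdr_white: float = 300.0, gamma: float = 2.2) -> float:
    """Luminance a gamma-2.2 SDR pipeline would produce from that signal."""
    return sdr_white * signal ** gamma


scene = [0.1, 1.0, 10.0, 100.0, 1000.0]  # intended luminance, nits
shown = [shown_as_sdr(pq_encode(n)) for n in scene]
for want, got in zip(scene, shown):
    print(f"intended {want:>7.1f} nits -> shown {got:7.2f} nits")
print(f"contrast: intended {scene[-1] / scene[0]:.0f}:1, "
      f"shown {shown[-1] / shown[0]:.0f}:1")
```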

Viewing Environment

Ambient lighting affects HDR contrast and eye comfort.

- Managing Glare and Reflections: A high dynamic range display performs best in controlled lighting. Avoid direct light sources behind or in front of the screen.

- Reducing Eye Fatigue: Adjust room brightness and monitor positioning to prevent HDR-induced eye strain, especially on 1,000-nit or 2,000-nit monitors.

For optimal HDR performance, ensure hardware compatibility, precise calibration, and controlled lighting. Proper settings allow 4K HDR computer monitors to display rich colors, deep contrast, and accurate brightness levels.

Conclusion

HDR technology enhances brightness, contrast, and color depth, making images more realistic than SDR. Choosing the right HDR monitor requires understanding certifications, panel types, brightness levels, and software compatibility. Proper setup and calibration ensure the best HDR performance for gaming, content creation, and media consumption.

FAQ

1. Do I need a new graphics card for HDR?

Most modern GPUs support HDR, but older cards may not output the correct signal. Check your GPU’s specifications or driver updates to confirm HDR compatibility.

2. Is HDR always brighter than SDR?

HDR allows for higher peak brightness, but it also focuses on deeper blacks. Proper HDR balances these extremes rather than just making the screen brighter overall.

3. Can I enable HDR on any monitor?

No. Your monitor must have HDR support and meet minimum brightness/contrast specs. Merely switching on HDR settings without true hardware support won’t deliver a real HDR effect.

4. Does HDR require specific cables?

HDR typically requires high-bandwidth cables like HDMI 2.0 (or newer) or DisplayPort 1.4. Older cables may not reliably support higher data throughput for HDR signals.

5. Will HDR cause more eye strain?

Some users find that the higher brightness causes eye fatigue, but you can reduce it by adjusting room lighting, lowering monitor brightness, or taking regular breaks.

6. Are there downsides to using DCR instead of HDR?

DCR can help improve contrast on non-HDR monitors, but it may produce inconsistent brightness and lacks the refined color range of true HDR.

7. What if HDR content looks “washed out”?

Check your OS and game settings to ensure HDR is enabled properly, and verify the monitor’s color profile. Sometimes manual calibration is needed to fix dull or faded visuals.

8. Is XDR overkill for regular home use?

XDR delivers extremely high brightness and contrast, typically reserved for professional content creation. For everyday tasks and gaming, standard HDR is usually sufficient.