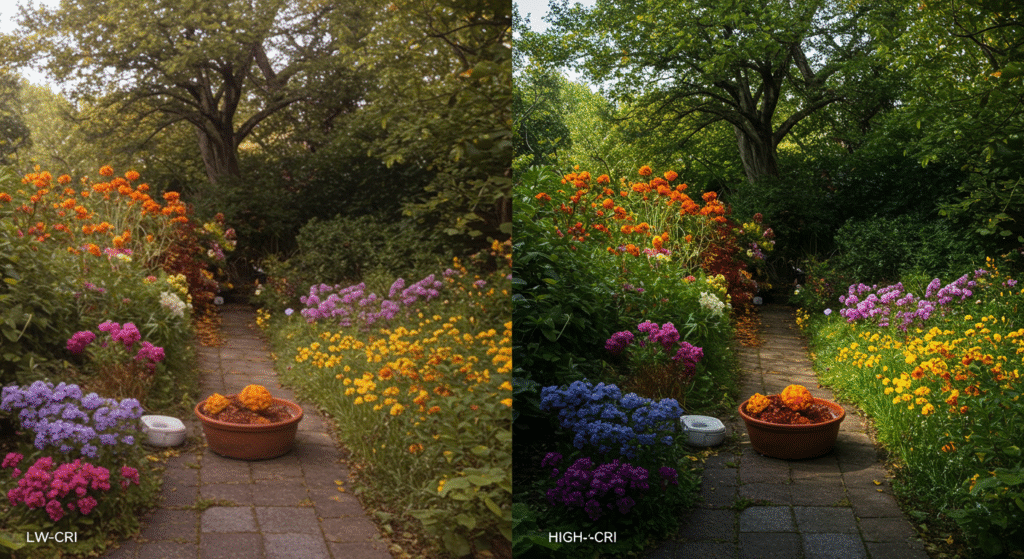

CRI (Color Rendering Index) measures how accurately a light source shows colors compared to natural daylight, on a scale from 0 (poor accuracy) to 100 (perfect accuracy). A high CRI rating means the colors you see on the screen closely match how they appear in real life.

LCD backlights with a high CRI rating (90 or above) display colors accurately and naturally. This accuracy is needed for tasks like graphic design, video production, and medical imaging, where incorrect colors can cause mistakes or reduce quality.

LCD screens with a lower CRI (below 80) can make colors look dull, faded, or incorrect. This hurts both everyday tasks and professional work, and can lead to eye strain or inaccurate results.

What Is CRI and Why Does It Matter for Lighting?

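CRI (Color Rendering Index) measures how accurately a light source reveals the colors of objects compared with a reference light of the same color temperature, rated on a scale from 0 to 100. For sources of 5000K and above, the reference is a daylight spectrum. For warmer sources, it is an ideal blackbody radiator, which is similar to incandescent light. A higher CRI means colors appear closer to how they look under that reference, which makes subtle color differences easier to distinguish.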

When selecting bulbs, a CRI rating between 80 and 90 is typically suitable for general indoor lighting, while specialized tasks requiring precise color evaluation call for lamps rated 90 or above. Lighting professionals prefer high-CRI bulbs because they provide more accurate color representation, which is critical in design studios, retail shops, and medical facilities.

CRI Scale and Its Practical Meaning:

The CRI scale ranges from 0 (poor color rendering) to 100 (perfect color rendering), and most indoor bulbs fall between 80 and 95. A CRI rating of 90 or higher is ideal for tasks such as photography, painting, or medical examinations where accurate color judgment is needed, while ratings around 80 are suitable for general home and office use.
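The score comes from the CIE 13.3 method. First, the color shift ΔE_i of each test sample i is measured in the CIE 1964 U*V*W* space, comparing the test source with the reference light. Each shift becomes a special index, R_i = 100 − 4.6·ΔE_i. The general score Ra is then the mean of R1–R8.

A minimal sketch follows. It assumes the eight ΔE values have already been computed; the shift values are invented for illustration.

```python
from statistics import fmean

CIE_SCALE = 4.6  # scaling constant from the CIE 13.3 CRI method

def special_index(delta_e: float) -> float:
    """Special index R_i for one test sample, given its color shift
    (CIE 1964 U*V*W* difference) between test source and reference."""
    return 100.0 - CIE_SCALE * delta_e

def general_cri(delta_e_r1_to_r8: list[float]) -> float:
    """General index Ra: arithmetic mean of the special indices R1-R8."""
    if len(delta_e_r1_to_r8) != 8:
        raise ValueError("Ra is defined over exactly eight samples, R1-R8")
    return fmean(special_index(d) for d in delta_e_r1_to_r8)

# Hypothetical color shifts for one backlight LED (illustrative values only)
shifts = [1.0, 1.5, 2.0, 1.2, 1.8, 2.2, 1.1, 2.4]
print(round(general_cri(shifts), 1))  # 92.4
```

The formula caps Ra at 100, which corresponds to zero color shift. It has no hard floor at 0, though: a large enough shift produces a negative index.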

How Does CRI Differ from Color Temperature?

CRI differs from color temperature in that it describes color accuracy, while color temperature indicates whether a light appears warm (yellowish, 2700K–3000K) or cool (bluish, 5000K–6500K). For example, two bulbs can have identical color temperatures but different CRI ratings, resulting in one providing better color accuracy and clarity despite both appearing equally cool or warm.
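Color temperature is computed from the light's chromaticity coordinates alone. Two spectra with the same (x, y) therefore get the same color temperature, even when their CRI differs.

As an illustration, the sketch below uses McCamy's cubic approximation to estimate correlated color temperature from CIE 1931 (x, y). It is an approximation and is only meaningful for chromaticities close to the blackbody locus.

```python
def mccamy_cct(x: float, y: float) -> float:
    """Approximate correlated color temperature (K) from CIE 1931 x, y
    using McCamy's cubic formula. Valid only near the blackbody locus."""
    n = (x - 0.3320) / (0.1858 - y)
    return 449.0 * n**3 + 3525.0 * n**2 + 6823.3 * n + 5520.33

# D65 white point, the usual target for "6500K" displays
print(round(mccamy_cct(0.3127, 0.3290)))  # 6505
```

Nothing in the calculation looks at the spectrum's shape, so it cannot tell a high-CRI backlight from a low-CRI one with the same white point.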

Why Does CRI Matter for LCD Backlight Quality?

CRI (Color Rendering Index) matters for LCD backlight quality because it determines how accurately colors are displayed on screens compared to natural daylight. High CRI backlights ensure accurate and vibrant colors, while low CRI backlights can result in muted or distorted colors, reducing visual accuracy.

Professionals working in fields like graphic design, photography, or medical imaging rely heavily on displays with a CRI rating above 90 to ensure color precision for critical tasks. Displays with a low CRI, typically around 70 or lower, often produce color inaccuracies, negatively affecting daily tasks and user satisfaction.

What Happens When LCD Backlights Have Low CRI?

When LCD backlights have a low CRI rating, colors displayed can appear washed out, dull, or incorrectly tinted, impacting visual clarity and user comfort. In professional settings, this means critical tasks such as photo editing, digital artwork, or medical diagnostics become unreliable due to incorrect color representation, leading to potential errors and decreased productivity.

Technical Difference Between High and Low CRI Backlights

High CRI backlights emit a broad spectrum of light that closely resembles natural daylight, enhancing color richness and accuracy, while low CRI backlights emit a narrower spectrum, limiting accurate color reproduction. For instance, standard LCD displays using backlights with a CRI around 80 might struggle to reproduce reds and skin tones accurately, whereas specialized displays using high CRI backlights (90+) consistently deliver precise color rendering, crucial for tasks like digital imaging and professional video editing.

| CRI Rating | Typical Usage | Color Quality Observed |
|---|---|---|
| 90–100 | Professional photography, medical screens | Accurate, vibrant, realistic colors |
| 80–89 | Office monitors, consumer TVs | Good general-purpose color; minor inaccuracies noticeable |
| Below 80 | Basic consumer displays | Dull, muted colors; noticeable inaccuracies and distortions |

What's the Difference Between CRI and Color Temperature?

CRI (Color Rendering Index) measures how accurately colors appear under a light source, while color temperature (measured in Kelvin) describes whether a light appears warm (yellowish) or cool (bluish). These two metrics interact in displays, where both accurate color representation (high CRI) and suitable warmth or coolness (appropriate Kelvin rating) influence visual comfort and image accuracy.

In display technology, a high CRI value (above 90) ensures colors look accurate and realistic, while color temperatures between 5000K and 6500K are common for screens because they comfortably replicate daylight conditions. Misunderstandings arise when buyers confuse a screen's high Kelvin rating (cooler color) with better color accuracy. A screen can have a cool tone and still have low color accuracy if its CRI rating is low.

Common Misconceptions About CRI and Color Temperature

Many consumers mistakenly think a higher color temperature (e.g., 6500K) automatically means better color accuracy, but color temperature only controls how warm or cool the image looks, not how true-to-life colors appear. Displays marketed with high color temperature as “daylight-like” often neglect to disclose their actual CRI rating, which can lead users to choose screens that show inaccurate colors despite appearing bright and crisp.

What Are the Limitations of CRI and Are There Other Useful Metrics?

CRI has limitations because its general index (Ra) averages only eight standard color samples (R1–R8). As a result, it may not reflect performance across all hues, particularly deep reds and other saturated colors. Alternative metrics such as TM-30 and CQS (Color Quality Scale) have emerged to address these shortcomings, along with supplementary values such as R9, the saturated-red special index that Ra leaves out. Together they give a more comprehensive analysis of color rendering, particularly for specialized displays used in professional applications.

| Alternative Metric | Technical Benefit | Recommended Application |
|---|---|---|
| TM-30 | Uses 99 color samples, providing detailed accuracy | Graphic design, photography displays |
| CQS | Considers color saturation, not just accuracy | Retail, fashion industry displays |
| R9 Value | Specifically measures deep red accuracy | Medical imaging, critical color editing |
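Because Ra averages only R1–R8, a backlight can post a high Ra while rendering saturated red (R9) poorly.

The sketch below assumes the datasheet lists the special indices R1–R9. It computes Ra and checks R9 separately. Two things are assumptions: the sample values are invented, and the R9 threshold of 50 is a hypothetical acceptance criterion, not a value from any standard.

```python
from statistics import fmean

def check_rendering(r: dict[str, float], min_ra: float = 90.0,
                    min_r9: float = 50.0) -> dict[str, object]:
    """Compute Ra from R1-R8 and check deep red (R9) on its own.

    min_r9 is a hypothetical, project-specific threshold."""
    ra = fmean(r[f"R{i}"] for i in range(1, 9))  # R9 does not enter Ra
    return {
        "Ra": ra,
        "R9": r["R9"],
        "passes": ra >= min_ra and r["R9"] >= min_r9,
    }

# Invented datasheet values: strong Ra, weak deep red
spec = {"R1": 93, "R2": 96, "R3": 95, "R4": 92, "R5": 93,
        "R6": 94, "R7": 93, "R8": 88, "R9": 30}
print(check_rendering(spec))
```

Here Ra works out to 93.0, but the check still fails because R9 is only 30. This is exactly the gap that the R9 value and TM-30 are meant to expose.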

What Is a Good CRI Rating for LCD Backlights?

| Application Type | Recommended CRI Rating |
|---|---|
| General Consumer Use | 80–89 |
| Photography and Graphic Design | 90–95 |
| Medical Imaging | 95–98 |

How to Accurately Interpret CRI Ratings on Product Specifications?

CRI ratings listed on product specifications refer to how closely the backlight reproduces colors compared to natural daylight, with 100 being a perfect score. When reviewing specs, look specifically for clearly stated CRI ratings above 90 if color precision is a requirement. Be cautious of vague terms like “natural colors” or “daylight-like,” as these claims may not accurately reflect the actual CRI rating.

How to Identify Reliable High-CRI Backlights and Avoid Misleading Marketing?

Reliable high-CRI backlights typically have clear numerical ratings (e.g., CRI >90) listed explicitly on product specification sheets, often supported by independent testing certifications. Be wary of displays using vague descriptions like “premium color,” “true-to-life colors,” or omitting clear CRI ratings altogether, as these can indicate lower actual CRI values hidden behind marketing language.
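The screening advice above can be roughed out as a quick check over datasheet text. This sketch makes several assumptions:

- The spec is available as plain text.
- It states CRI in forms like "CRI 92", "CRI >90" or "Ra≥95".
- The regular expression and the phrase list are illustrative heuristics, not an industry tool.

```python
import re

# Marketing phrases the article warns about (matched case-insensitively)
VAGUE_PHRASES = ("natural colors", "daylight-like", "premium color",
                 "true-to-life colors")

# "CRI" or "Ra", up to 10 non-digit characters, then a 2-3 digit number
CRI_PATTERN = re.compile(r"\b(?:CRI|Ra)\b[^0-9]{0,10}?(\d{2,3})(?!\d)")

def screen_spec(text: str, required_cri: int = 90) -> list[str]:
    """Return warnings for a spec sheet; an empty list means no red flags."""
    warnings = []
    values = [int(v) for v in CRI_PATTERN.findall(text)]
    if not values:
        warnings.append("no numeric CRI rating stated")
    elif min(values) < required_cri:
        warnings.append(f"stated CRI {min(values)} is below {required_cri}")
    lowered = text.lower()
    for phrase in VAGUE_PHRASES:
        if phrase in lowered:
            warnings.append(f"marketing phrase '{phrase}': verify against a measured CRI")
    return warnings

print(screen_spec("Backlight: white LED, CRI 92, 6500 K"))
print(screen_spec("Premium color, true-to-life colors"))
```

The `(?!\d)` lookahead stops a nearby color temperature, such as "6500K", from being misread as a CRI value.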

How Does LED Spectral Quality Influence CRI Scores in LCD Backlights?

The spectral quality of LEDs directly affects the CRI (Color Rendering Index) scores of LCD backlights. CRI compares how a set of test color samples appear under the source with how they appear under a reference light, so gaps or spikes in the emitted spectrum shift those colors and lower the score. LEDs with broader, more balanced spectra usually achieve higher CRI ratings and more accurate, consistent color representation.

Achieving high CRI ratings (90 or above) often requires engineering compromises, such as lower luminous efficacy (lumens per watt) or reduced device lifespan. These trade-offs happen because expanding LED spectra to cover more wavelengths typically involves additional phosphors or specialized materials, which can reduce brightness efficiency and accelerate aging.

Technical Consideration: LEDs optimized for high CRI typically use advanced phosphor blends or quantum-dot technologies, resulting in spectral distributions that closely resemble natural daylight. However, such LEDs generally deliver lower luminous efficacy—often 10%-20% lower—and experience faster phosphor degradation, impacting long-term color consistency.
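The efficacy penalty can be put in concrete terms with a short calculation. Electrical power equals luminous flux divided by efficacy. Holding backlight output constant while efficacy falls by a fraction r therefore raises power by a factor of 1/(1 − r).

The 400 lm output and 120 lm/W rating below are hypothetical figures chosen for illustration.

```python
def backlight_power_w(flux_lm: float, efficacy_lm_per_w: float) -> float:
    """Electrical power (W) needed to produce a given luminous flux."""
    return flux_lm / efficacy_lm_per_w

def power_increase(efficacy_drop: float) -> float:
    """Fractional power increase at constant flux when efficacy falls
    by efficacy_drop (0-1)."""
    return 1.0 / (1.0 - efficacy_drop) - 1.0

# Hypothetical backlight: 400 lm from LEDs rated 120 lm/W
base = backlight_power_w(400, 120)  # about 3.33 W
for drop in (0.10, 0.20):
    high_cri = backlight_power_w(400, 120 * (1 - drop))
    print(f"{drop:.0%} lower efficacy: {high_cri:.2f} W (+{power_increase(drop):.1%})")
```

This prints about 3.70 W (+11.1%) for a 10% drop and 4.17 W (+25.0%) for a 20% drop. A 20% efficacy penalty therefore costs 25% more power, not 20%.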

How Does CRI Interact with Display Gamut and Brightness?

Higher CRI ratings can enhance perceived color gamut and brightness accuracy, making colors appear more natural and detailed. However, optimizing CRI alongside other parameters like brightness and color gamut presents technical challenges, as improvements in one area often lead to compromises elsewhere in the display’s performance.

- Increasing CRI often involves adjusting LED spectra, which may slightly reduce peak brightness due to lower luminous efficacy.

- Enhancing spectral quality for better CRI can shrink the display's maximum achievable gamut. A broad, smooth spectrum lets more light leak through neighboring color filters, which desaturates the primaries, whereas wide-gamut backlights depend on narrow emission peaks.

- Engineers must balance these factors carefully, sometimes utilizing advanced solutions like quantum-dot enhancements or specialized phosphors to maintain acceptable brightness, gamut, and CRI simultaneously.
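One common way to quantify gamut is to compare the area of the triangle formed by the display primaries in CIE 1931 xy with a reference such as sRGB. The sketch below does this with the shoelace formula, using the published sRGB and DCI-P3 primaries. P3 stands in for a wide-gamut target that narrow-peak backlights aim for; it is not a claim about any particular backlight.

Two caveats apply. First, this is an area ratio, so it can exceed 100% even when parts of sRGB are not covered; true coverage needs a polygon intersection. Second, area in xy is not perceptually uniform.

```python
Point = tuple[float, float]

# Primaries in CIE 1931 xy: (red, green, blue)
SRGB = ((0.64, 0.33), (0.30, 0.60), (0.15, 0.06))
P3 = ((0.680, 0.320), (0.265, 0.690), (0.150, 0.060))

def triangle_area(p: tuple[Point, Point, Point]) -> float:
    """Area of a triangle in the xy plane (shoelace formula)."""
    (x1, y1), (x2, y2), (x3, y3) = p
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2.0

def area_ratio(primaries, reference=SRGB) -> float:
    """Gamut area relative to a reference gamut (1.0 = same area)."""
    return triangle_area(primaries) / triangle_area(reference)

print(f"{area_ratio(P3):.0%} of sRGB area")  # 136% of sRGB area
```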

Does High CRI Lighting Improve Eye Comfort and Overall Wellbeing?

High CRI lighting can enhance eye comfort because it accurately reproduces colors similar to daylight, reducing eye strain and visual fatigue associated with poor or unnatural color rendering. Improved color accuracy also positively influences mood and productivity by creating a visually natural environment.

Professionals who work extensively with screens or under artificial lighting often find that using high CRI bulbs (CRI 90 or above) helps maintain comfortable vision during prolonged tasks. Accurate color rendering minimizes visual stress caused by distorted hues or poor contrast, supporting better concentration and reducing fatigue symptoms.

Environmental Implications of High-CRI LED Production and Usage

The manufacturing of high-CRI LEDs typically involves more complex phosphor formulations or specialized materials, which can slightly increase environmental impact compared to standard LEDs. Once operational, their energy efficiency and longevity are broadly comparable to those of standard LEDs, although usually somewhat lower. Over their lifetime, this balances the added manufacturing impact with responsible energy usage.

- Producing high-CRI LEDs may require additional rare-earth phosphors or advanced quantum dots, leading to modest increases in resource extraction and production complexity.

- Despite the added production complexity, operating efficiency typically stays close to that of standard LEDs, which keeps long-term energy consumption and the environmental footprint during use in check.

What CRI Rating Should You Choose for Your Specific Application?

The ideal CRI (Color Rendering Index) rating depends on your specific application: general home and office use typically requires ratings around 80–89, while tasks like professional photography, medical diagnostics, and detailed graphic design demand higher accuracy with ratings of approximately 90–98.

Selecting the correct CRI rating ensures color accuracy suited to your tasks, enhancing visual clarity and reducing errors related to inaccurate color perception. Lower CRI ratings (around 80–85) offer acceptable color for standard tasks, while higher ratings (90–98) become necessary when precise color accuracy directly impacts your job performance.

Recommended CRI Ratings and Backlight Selections by Use Case

| Application Type | Recommended CRI Rating | Technical Details & Guidance |
|---|---|---|
| Home and Office Environments | 80–89 | Balances comfort and cost-effectiveness; sufficient for daily tasks like document viewing, web browsing, and casual multimedia. |
| Professional Photography & Graphic Design | 90–95 | Ensures precise color matching and editing accuracy; critical for tasks involving detailed color evaluation like photo editing and graphic production. |
| Medical Imaging and Diagnostics | 95–98 | Provides highly accurate color reproduction crucial for correct diagnosis; higher CRI ensures medical images are displayed with minimal color distortion. |
| Industrial and Control Displays | 85–90 | Good clarity and reliable color distinction for operational safety, control monitoring, and machinery interfaces without needing maximum color accuracy. |
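When shortlisting panels, the table can be encoded as a simple lookup. In this sketch, the application keys are hypothetical identifiers, and the ranges are this article's guidance, not a standard. The check also rests on one assumption: exceeding a range's upper bound counts as acceptable, on the reading that the upper bound reflects cost rather than a hard limit.

```python
# Recommended CRI ranges from the table above (article guidance, not a standard)
RECOMMENDED_CRI = {
    "home_office": (80, 89),
    "photo_design": (90, 95),
    "medical": (95, 98),
    "industrial": (85, 90),
}

def meets_recommendation(application: str, panel_cri: float) -> bool:
    """True if a backlight's stated CRI reaches the lower bound for the
    application. Exceeding the upper bound is treated as acceptable."""
    low, _high = RECOMMENDED_CRI[application]
    return panel_cri >= low

# Which applications would a CRI 92 backlight cover?
print([app for app in RECOMMENDED_CRI if meets_recommendation(app, 92)])
```

For a CRI 92 panel, this lists `home_office`, `photo_design` and `industrial`, but not `medical`.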

Technical Considerations for High-Accuracy Applications

For applications like medical diagnostics or professional photography, choosing a backlight with CRI ratings of 95 or higher ensures minimal deviation from natural color perception. These specialized displays typically employ advanced LED technologies or quantum-dot enhancements to deliver consistent, accurate color rendering over extended use, despite slight trade-offs in efficiency or lifespan.

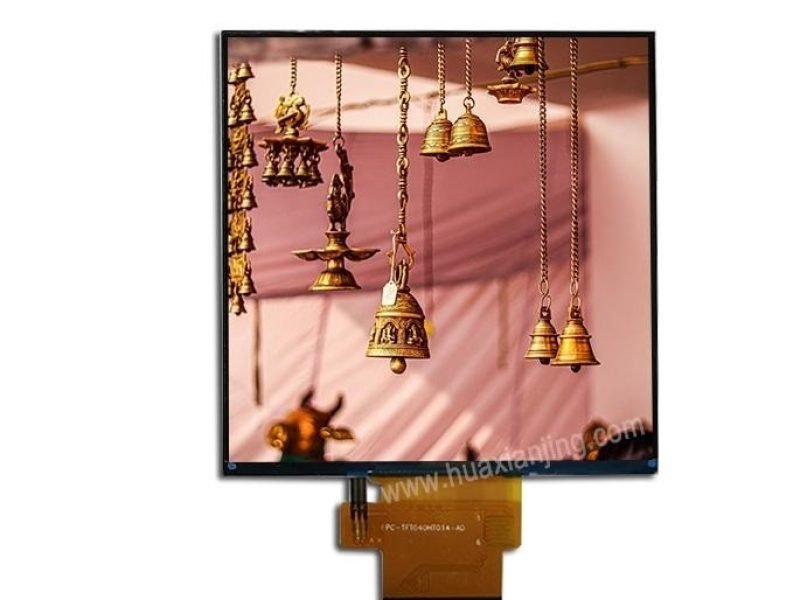

Where Can You Find Visual Demonstrations and Resources About CRI?

You can find visual CRI demonstrations and resources in color rendering index charts and comparison graphs. These show how color accuracy differs between CRI ratings and how CRI interacts with color temperature, helping you understand how different CRI ratings affect realistic color representation in practical scenarios.

Reviewing CRI color charts can make it easier to identify the practical impact of varying CRI levels, showing precisely how colors appear at different CRI ratings (e.g., 70, 80, 90, or 95+). These visual comparisons often include side-by-side images of common objects under different lighting, clearly highlighting the enhanced realism achieved with high CRI lamps.

Recommended Visual Tools for Understanding CRI:

- Colour Rendering Index Chart: Clearly illustrates how individual colors (R1–R8, and sometimes R9) appear at various CRI ratings.

- CRI vs. Color Temperature Visualization: Useful for showing how color temperature (Kelvin) and CRI ratings together affect perceived image quality and color accuracy, helping avoid common misconceptions.

- Sample Color Charts: Practical examples showing everyday items (skin tones, fruits, textiles) illuminated at different CRI ratings (80, 90, 95+) to highlight the visual differences clearly.

Sources for Technical Standards and Further Reading:

For deeper technical understanding, you should consult authoritative standards bodies and technical references, such as:

- CIE (International Commission on Illumination): Responsible for defining and standardizing the Color Rendering Index (CRI) metric.

- IES (Illuminating Engineering Society): Publishes the newer TM-30 standard, a more detailed alternative to traditional CRI.

- ANSI (American National Standards Institute): Provides official documentation and standards related to lighting and color accuracy in display technology.

Related Articles:

How Should You Drive Backlight in an Embedded LCD Display?

What Is a Frame Buffer and How Does It Work in Embedded Systems?

How Do You Calculate the Dot Clock for an LCD Display?

How is Parity Error Detection in LCD Frame Buffers Tested During Memory Stress?

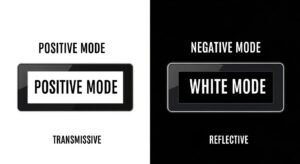

Why Are Circular Polarizers Used in Reflective and Transflective LCDs?

FAQ

Are High-CRI Backlights Fully Compatible with All LCD Hardware?

Most high-CRI backlights are compatible with common LCD hardware, but integrating specialized high-CRI LEDs may require minor adjustments to the driver electronics or color calibration software. When switching to high-CRI backlights, verify compatibility against your existing equipment specifications and recalibrate the display.

Do High-CRI LEDs Have Shorter Lifespans or Reduced Efficiency?

High-CRI LEDs can have slightly lower luminous efficacy (lumens per watt) than standard LEDs because of their broader spectral output. Their lifespan may also be somewhat shorter, typically by around 5%–10%, because the more complex phosphor layers or quantum-dot materials degrade slightly faster over extended use.

Does CRI Performance Change Over the Life of the Backlight?

CRI performance can gradually decline as the backlight ages, primarily due to the degradation of phosphors or quantum-dot materials that alter the original spectral output. Usually, this reduction is minimal, with CRI ratings decreasing by approximately 2–4 points over typical operating lifetimes (20,000–50,000 hours), which is acceptable for most general and professional applications.
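To check whether the 2–4 point drift matters for a planned service life, you can interpolate. This rough sketch assumes, purely for illustration, that CRI declines linearly with operating hours. Real phosphor aging is rarely linear, so measured data from the supplier should replace this model. The function and its defaults are hypothetical.

```python
def estimated_cri(initial_cri: float, hours: float,
                  rated_life_h: float = 50_000, total_drop: float = 4.0) -> float:
    """CRI after `hours` of operation under a linear-decline assumption.

    total_drop is the CRI loss assumed at the end of rated life (the article
    cites 2-4 points over 20,000-50,000 h)."""
    if not 0 <= hours <= rated_life_h:
        raise ValueError("the linear model only covers 0..rated_life_h hours")
    return initial_cri - total_drop * hours / rated_life_h

# A CRI 97 backlight under the worst case of the 2-4 point range
for h in (0, 25_000, 50_000):
    print(h, estimated_cri(97, h))
```

Under the worst-case assumption, a backlight bought at CRI 97 would end a 50,000-hour life at 93. That is below the 95–98 band suggested for medical imaging, so extra headroom or periodic re-measurement may be worth planning for.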